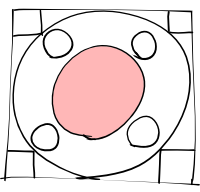

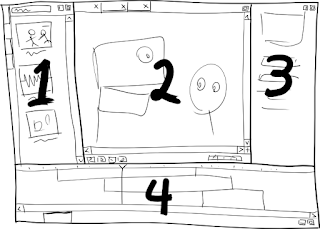

- regular keyframes - used internally to represent recorded motions; under normal circumstances the user does not manipulate them directly

- target frames - normally manipulated "by hand", and better suited to making fine adjustments to a previously recorded animation (though nothing prevents you from using target frames exclusively in your animation if you want to)

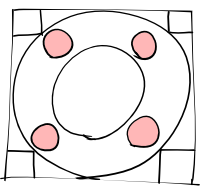

Interpolation between regular keyframes is always smooth. For target frames, smooth interpolation can be toggled on and off per frame (it's off by default); a segment between two target frames, neither of which is "smooth", is simply linearly interpolated. I don't yet know which method should be used for smooth interpolation, but to eliminate "bounce" and "overshoot", the interpolation function should preserve monotonicity in the keyframes.
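One candidate that meets the monotonicity requirement is monotone cubic Hermite interpolation with Fritsch-Carlson tangent limiting. The sketch below is only an illustration of that technique, not a decision about what Moing should use; the function names and the use of scalar values per keyframe are assumptions made for the example.

```python
from bisect import bisect_right


def monotone_tangents(ts, vs):
    """Fritsch-Carlson tangents for keyframes at strictly increasing times ts."""
    n = len(ts)
    # Secant slope of each segment between neighbouring keyframes.
    d = [(vs[i + 1] - vs[i]) / (ts[i + 1] - ts[i]) for i in range(n - 1)]
    m = [0.0] * n
    m[0], m[-1] = d[0], d[-1]
    for i in range(1, n - 1):
        # A flat tangent at local extrema is what prevents overshoot.
        m[i] = 0.0 if d[i - 1] * d[i] <= 0 else (d[i - 1] + d[i]) / 2
    for i in range(n - 1):
        if d[i] == 0:
            m[i] = m[i + 1] = 0.0
            continue
        a, b = m[i] / d[i], m[i + 1] / d[i]
        s = a * a + b * b
        if s > 9:
            # Scale the tangents back into the region that keeps the
            # cubic monotone on this segment.
            tau = 3 / s ** 0.5
            m[i] = tau * a * d[i]
            m[i + 1] = tau * b * d[i]
    return m


def interpolate(ts, vs, t):
    """Evaluate the monotone cubic through (ts, vs) at time t (clamped)."""
    if t <= ts[0]:
        return vs[0]
    if t >= ts[-1]:
        return vs[-1]
    m = monotone_tangents(ts, vs)
    i = bisect_right(ts, t) - 1
    h = ts[i + 1] - ts[i]
    u = (t - ts[i]) / h
    # Cubic Hermite basis functions.
    h00 = 2 * u**3 - 3 * u**2 + 1
    h10 = u**3 - 2 * u**2 + u
    h01 = -2 * u**3 + 3 * u**2
    h11 = u**3 - u**2
    return h00 * vs[i] + h10 * h * m[i] + h01 * vs[i + 1] + h11 * h * m[i + 1]
```

With keyframe values that hold, rise, then hold again, the curve stays inside the range of the keyframes instead of dipping below the first plateau or bouncing above the second, which is the behaviour an ordinary cubic spline would not guarantee.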

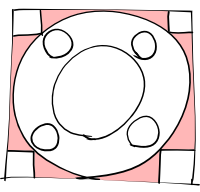

While target frames are usually created and modified by moving things around in the normal editing mode, regular keyframes are created (and overwritten) in "record" mode. Apart from recording, ranges of regular keyframes can be smoothed or cleared, but they can't otherwise be manipulated. When you record a segment of motion, the initial and final frames become target frames, representing a simple linear path from start to end, and regular keyframes are created between them to capture the more complex recorded motion as deltas relative to that straight line.
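As a rough sketch of the delta representation described above: the recorded samples are reduced to two target frames plus per-keyframe offsets from the straight line between them. Everything here is an assumption for illustration (the names `record_segment` and `rebuild`, scalar values instead of positions, and storing each keyframe's time as a fraction of the segment rather than as an absolute time).

```python
def record_segment(samples):
    """Split recorded (time, value) samples into two target frames and deltas.

    samples must be sorted by time and contain at least two entries.
    Returns (start, end, deltas), where deltas is a list of (u, offset):
    u is the keyframe's position in the segment (0..1) and offset is its
    distance from the straight line between the two target frames.
    """
    (t0, v0), (t1, v1) = samples[0], samples[-1]
    deltas = []
    for t, v in samples[1:-1]:
        u = (t - t0) / (t1 - t0)
        baseline = v0 + u * (v1 - v0)
        deltas.append((u, v - baseline))
    return (t0, v0), (t1, v1), deltas


def rebuild(start, end, deltas):
    """Recover absolute (time, value) keyframes from target frames and deltas."""
    (t0, v0), (t1, v1) = start, end
    return [
        (t0 + u * (t1 - t0), v0 + u * (v1 - v0) + offset)
        for u, offset in deltas
    ]
```

Because the keyframes only store offsets, changing the value of either target frame and calling `rebuild` again shifts the whole recorded motion along with the new straight line.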

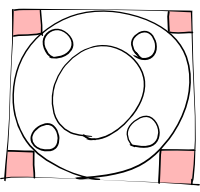

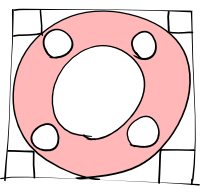

This lets you adjust the overall recorded animation simply by moving the target frames (you can even add new target frames in the middle for better control). Regular keyframes are locked in time to the target frames around them, so moving target frames in time also moves the regular keyframes between them. Moving two target frames apart in time expands the keyframe animation between them, and moving them closer together compresses it. It isn't possible to move one target frame past another in the same track.
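The time-locking can be pictured as a linear remapping of keyframe times from the old span between two target frames onto the new one. This is a minimal sketch under that assumption; `retime` is a hypothetical helper, not an existing Moing function.

```python
def retime(keyframe_times, old_span, new_span):
    """Map keyframe times from old_span onto new_span, keeping proportions.

    Each span is a (start, end) pair of target frame times. The end must
    come after the start, since a target frame cannot move past another
    one in the same track.
    """
    old_a, old_b = old_span
    new_a, new_b = new_span
    if not (old_a < old_b and new_a < new_b):
        raise ValueError("target frames must stay in order")
    scale = (new_b - new_a) / (old_b - old_a)
    return [new_a + (t - old_a) * scale for t in keyframe_times]
```

For example, `retime([2, 4, 6], (0, 8), (0, 16))` stretches the keyframes to twice their original spacing, while a narrower new span compresses them.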

Target frames are also useful in a different respect: when an animation is being rendered at a lower framerate than it was created at, Moing will make a reasonable effort to put each target frame on a separate frame within a track (with the usual stretching effect on keyframes) while preserving the general before/after relationship between target frames across tracks. This prevents the loss of brief cuts or actions between frames.
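A very simple way to approximate the per-track part of this is to round each target frame to the nearest output frame and push later ones forward when two would collide. The sketch below assumes times in seconds and a hypothetical `snap_to_frames` helper; it handles a single track only and makes no attempt at the cross-track ordering described above, nor at keeping the last frame inside the original duration.

```python
def snap_to_frames(target_times, fps):
    """Assign each target frame time (in seconds) a distinct frame index."""
    frames = []
    for t in sorted(target_times):
        f = round(t * fps)
        if frames and f <= frames[-1]:
            # This output frame is already taken by an earlier target
            # frame, so move to the next free one.
            f = frames[-1] + 1
        frames.append(f)
    return frames
```

Three target frames 0.04 s apart, rendered at 12 fps, would otherwise land on only two output frames; with the push-forward step each one keeps a frame of its own.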